Data Engineering

Dealing With Large Scale Data - Data Lakes, Pipelines & Platforms

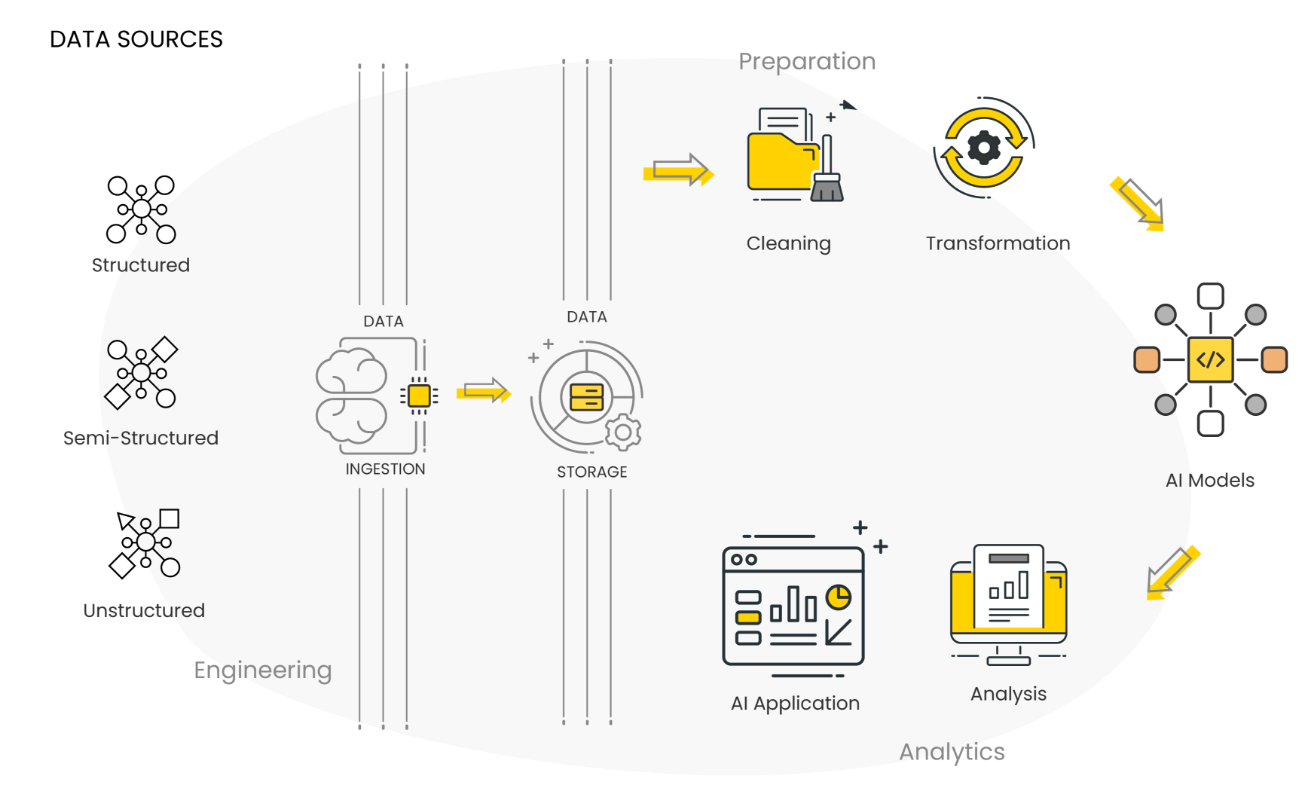

Data engineering helps determine the relevant datasets and prepare the data for consumption by AI solutions, which in turn provide valuable insights to the business. Data engineering constitutes almost 60% of the work in AI solutions. We at RandomTrees help get your data AI-ready: harness evolving data sources by automating and building robust capabilities for data cleaning and harmonization to feed machine learning models.

How Does Big Data Engineering Benefit You?

Organizations need robust capabilities to deal with the volume, velocity, veracity, and variety of data, and they have to make this data available for business users to consume. With the burden of handling massive data taken care of, data scientists can work on these comprehensive datasets and derive valuable insights that business teams can act on. This significantly increases the business value that can be realized.

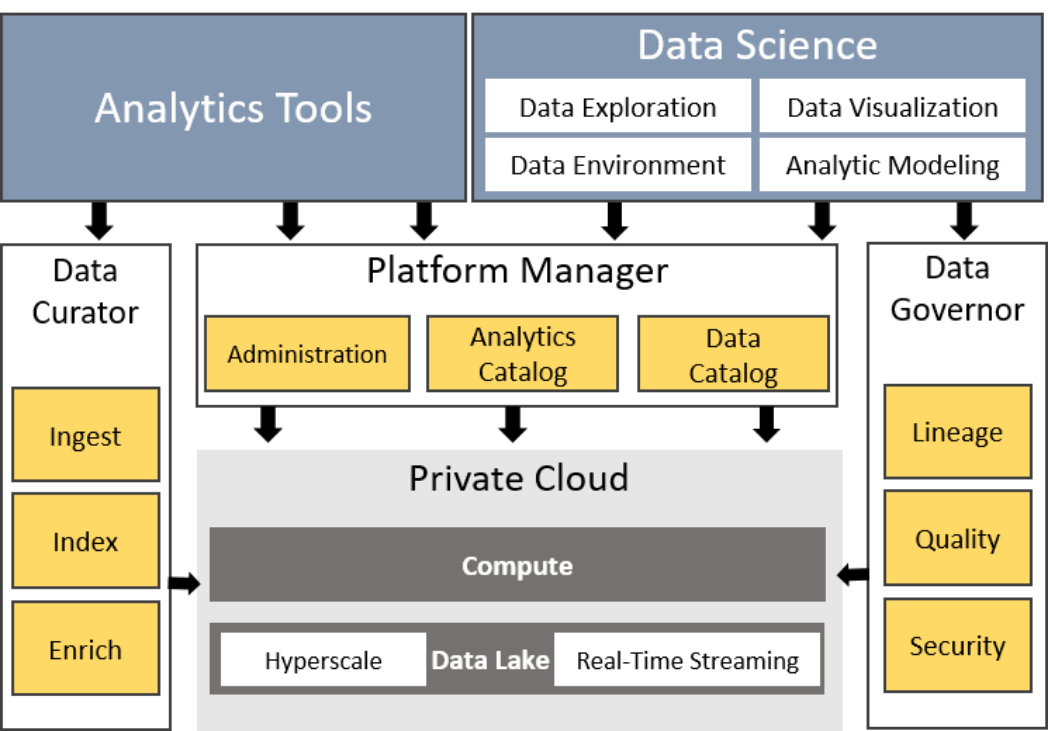

Data Engineering: Frameworks

Data Curation

Assist with identifying data sources and with verifying, cleansing, transforming, and integrating datasets to make them ready for ML model consumption.

Data Governance

Provide a data governance framework that helps organizations take care of the data they currently have, get more value from that data, and give users high visibility into it.

Data Lakes & Virtual Warehouses

Assist in powering new insights with high-performing data lakes.

Data Management

Suggest and help set up data platforms and tools to manage data.

Data Curation

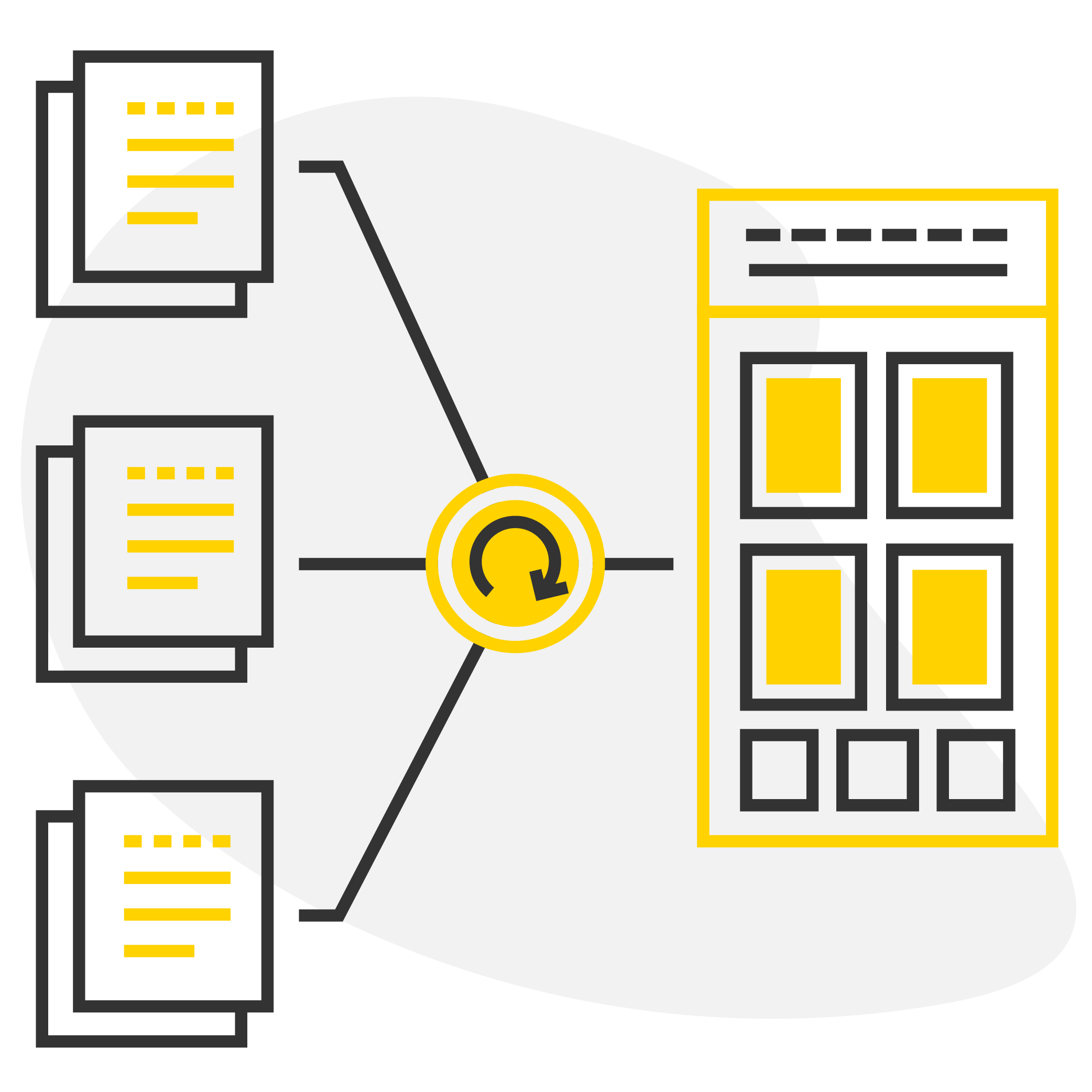

RandomTrees data engineers will help identify data sources and verify, cleanse, transform, and integrate datasets to make them ready for ML model consumption.

Data Governance

The RandomTrees data governance framework will help organizations take care of the data they have, get more value from that data, and make important aspects of that data visible to users.

Data Lakes

RandomTrees assists in powering new insights with high-performing data lakes.

Data Management

With its unique data strategy, RandomTrees will suggest and help set up data platforms and tools to manage data.

Data Engineering: Strategy Development

As part of strategy development, we assist with the following:

Help choose between open-source and vendor-based platforms.

Assist in choosing the right hosting strategy (on-premise vs. cloud).

Suggest the overall data architecture and big data ecosystem.

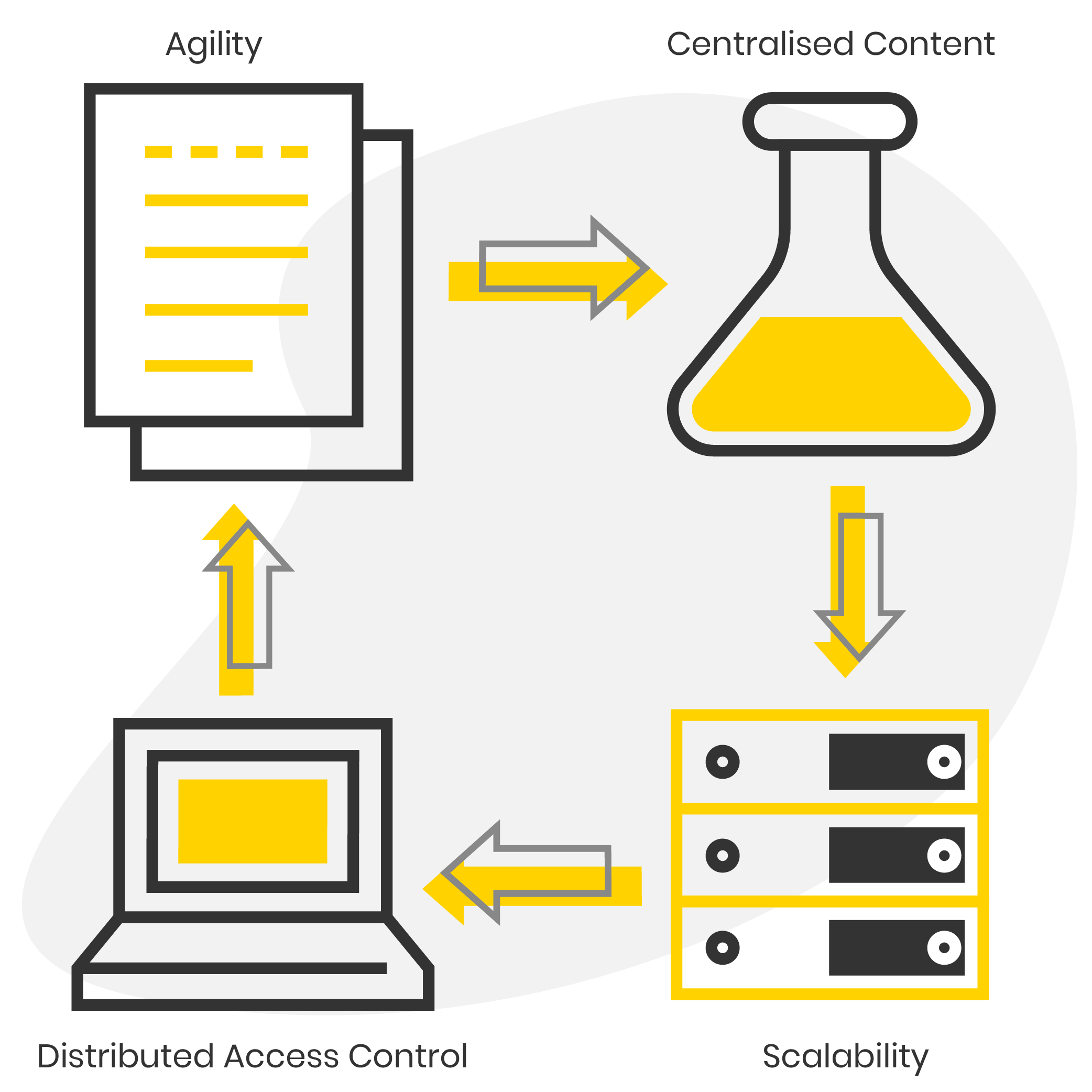

BIG DATA ARCHITECTURE

Key Engineering Aspects

Data Factories & Pipeline

Build complex Industrial Pipelines (IDPs), which are Data Factory-like frameworks.

Handle a variety of data (types, sources, and volumes).

Process data faster and handle repetitive tasks much more quickly.

Data Lakes & Virtual Data Warehouses

Design & build complex data lakes to continuously deliver AI-ready data in near real time.

Bring a breadth of data sources together to increase the power of AI & analytics manifold.

Help establish a real-time automated process for data ingestion and processing.

Data Harmonization

Make data clean, compatible, comparable & reliable, even when it comes from a wide range of unrelated sources.

Ensure data is ready for unsupervised learning, supervised learning, and advanced analytics.
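To make the idea of harmonization concrete, here is a minimal sketch in Python. It assumes two hypothetical sources (a CRM export and an ERP export) that describe customers with different field names, units, and date formats; the source names, fields, and sample values are invented for illustration and are not taken from any RandomTrees system.

```python
from datetime import datetime

# Hypothetical sample records: same kind of entity, incompatible shapes.
CRM_ROWS = [{"CustomerID": " 001 ", "Revenue_USD": "1,200.50", "SignupDate": "03/15/2023"}]
ERP_ROWS = [{"cust_id": "2", "revenue_cents": 99900, "signup": "2023-04-01"}]


def harmonize_crm(row):
    """Map a CRM-style row onto the shared schema."""
    return {
        "customer_id": int(row["CustomerID"].strip()),
        # Strip thousands separators before parsing the amount.
        "revenue_usd": float(row["Revenue_USD"].replace(",", "")),
        # Convert US-style dates to ISO 8601.
        "signup_date": datetime.strptime(row["SignupDate"], "%m/%d/%Y").date().isoformat(),
    }


def harmonize_erp(row):
    """Map an ERP-style row onto the shared schema."""
    return {
        "customer_id": int(row["cust_id"]),
        # Convert cents to dollars so both sources share one unit.
        "revenue_usd": row["revenue_cents"] / 100,
        "signup_date": datetime.strptime(row["signup"], "%Y-%m-%d").date().isoformat(),
    }


def harmonize_all(crm_rows, erp_rows):
    """Return all rows in one comparable schema, ordered by customer id."""
    rows = [harmonize_crm(r) for r in crm_rows] + [harmonize_erp(r) for r in erp_rows]
    return sorted(rows, key=lambda r: r["customer_id"])
```

Calling `harmonize_all(CRM_ROWS, ERP_ROWS)` yields rows that all share the keys `customer_id`, `revenue_usd`, and `signup_date`, so downstream models can treat both sources as one dataset. A production setup would add validation and error handling for malformed rows.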

Data Processing

Handle huge volumes of streaming data (IoT, social media such as Twitter and Facebook).

Process and analyze data in real time or near real time & generate valuable insights such as customer sentiment, competitor intelligence, and real-time trends.

Help take the right approach to improve latency, processing, or ease of operation.
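As an illustration of near-real-time trend detection on a stream, the sketch below keeps a sliding time window of events and reports the most frequent topics inside it. The class name `TrendingWindow`, the topic strings, and the assumption that events arrive in non-decreasing timestamp order are all hypothetical choices for this example; a real deployment would typically sit on a streaming platform rather than in-process Python.

```python
from collections import Counter, deque


class TrendingWindow:
    """Counts topics seen within the last `window_seconds` of a stream."""

    def __init__(self, window_seconds):
        self.window_seconds = window_seconds
        self._events = deque()    # (timestamp, topic) in arrival order
        self._counts = Counter()  # topic -> occurrences inside the window

    def add(self, timestamp, topic):
        """Record one event, then drop events that fell out of the window."""
        self._events.append((timestamp, topic))
        self._counts[topic] += 1
        self._evict(timestamp)

    def _evict(self, now):
        # Assumes timestamps are non-decreasing, so the oldest event is at the left.
        while self._events and self._events[0][0] <= now - self.window_seconds:
            _, old_topic = self._events.popleft()
            self._counts[old_topic] -= 1
            if self._counts[old_topic] == 0:
                del self._counts[old_topic]

    def top(self, n=3):
        """Return up to `n` (topic, count) pairs, most frequent first."""
        return self._counts.most_common(n)
```

Each `add` call does a small, bounded amount of work per event, which is what keeps latency low as volume grows: old events are evicted incrementally instead of rescanning the whole stream.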

Governance and Security

Democratize data with strong yet flexible governance.

Provide a data governance framework that helps organizations take care of the data they currently have.

Help make sure data is always secure but also continuously flowing to your business.

Knowledge Graph

Help you build complex Knowledge Graphs using graph databases, which are fundamental to modern AI systems.

Knowledge Graphs act like a memory for AI applications (for example, real-time recommendation & knowledge-sharing apps).

KGs respond with valuable insights by accumulating contextual knowledge with each conversation, using connected data to understand concepts and infer meaning.
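The recommendation use case can be sketched with a tiny in-memory graph of subject-relation-object facts. This is only an illustration of the idea: the `KnowledgeGraph` class, the relation names (`bought`, `in_category`, `contains`), and the sample facts are hypothetical, and a production system would use a graph database rather than a Python dictionary.

```python
from collections import defaultdict


class KnowledgeGraph:
    """Stores facts as (subject, relation) -> set of objects."""

    def __init__(self):
        self._edges = defaultdict(set)

    def add(self, subject, relation, obj):
        """Accumulate one new fact; the graph grows with every interaction."""
        self._edges[(subject, relation)].add(obj)

    def objects(self, subject, relation):
        """Return a copy of everything linked from `subject` via `relation`."""
        return set(self._edges.get((subject, relation), set()))

    def recommend(self, user):
        """Items sharing a category with the user's purchases, minus those purchases."""
        bought = self.objects(user, "bought")
        categories = set()
        for item in bought:
            categories |= self.objects(item, "in_category")
        candidates = set()
        for category in categories:
            candidates |= self.objects(category, "contains")
        return sorted(candidates - bought)


def build_demo_graph():
    """Build a small hypothetical graph for demonstration."""
    kg = KnowledgeGraph()
    kg.add("alice", "bought", "laptop")
    kg.add("laptop", "in_category", "electronics")
    kg.add("electronics", "contains", "laptop")
    kg.add("electronics", "contains", "headphones")
    return kg
```

With the demo graph, `build_demo_graph().recommend("alice")` follows the path from the user to her purchase, to its category, to other items in that category. Adding more facts changes later answers without any retraining, which is the "memory" behavior described above.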

Future State Architecture

Platforms & Tools